Introduction to Compilers

Overview of Compiler and Translator

Compiler:

A compiler is a crucial software tool used in the field of computer science and programming. It is responsible for translating high-level programming code written by developers into a lower-level language or machine code that can be executed by a computer's central processing unit (CPU). The process of compilation involves several steps, such as lexical analysis, syntax analysis, semantic analysis, and code generation.

The key functions of a compiler include:

-

Lexical Analysis: This is the first phase of compilation where the source code is broken down into tokens, which are the smallest meaningful units of code. These tokens are then analyzed for syntax and semantics.

-

Syntax Analysis: In this phase, the compiler checks whether the code follows the grammar rules of the programming language. It builds a parse tree to represent the syntactic structure of the program.

-

Semantic Analysis: This phase checks for the correctness of the code in terms of its meaning. It ensures that the program adheres to the language's semantics and enforces type checking rules.

-

Intermediate Code Generation: The compiler may generate an intermediate representation of the code that is closer to machine code but more human-readable than the final machine code.

-

Optimization: Many compilers include optimization steps to improve the efficiency of the generated code. This can involve reducing redundancy, simplifying expressions, and rearranging code.

-

Code Generation: In the final phase, the compiler generates the machine code or assembly code that can be executed by the computer's CPU.

A well-optimized compiler can significantly enhance the performance of a program and make it more efficient.

Translator:

A translator is a more general term that encompasses a variety of tools used to convert code or data from one form to another. Compilers are one type of translator, but there are also interpreters, assemblers, and more. Here's a brief overview of some common types of translators:

-

Compiler: As discussed above, a compiler translates high-level programming code into machine code or assembly code.

-

Interpreter: An interpreter processes code line by line and executes it directly without generating a separate machine code file. Interpreters are often used in scripting languages like Python or JavaScript.

-

Assembler: An assembler translates assembly language code into machine code. Assembly language is a low-level language that is specific to a particular CPU architecture.

-

Loader and Linker: These tools prepare executable programs for execution. A loader loads programs into memory, while a linker combines multiple object files into a single executable program.

-

Preprocessor: A preprocessor is used to process code before it is passed to the compiler. It can perform tasks like including header files, performing macro substitutions, and conditional compilation.

Types of Compiler

Compilers can be categorized into different types based on their functionality and target languages. Here are some common types of compilers:

-

Single-Pass Compiler: A single-pass compiler reads the source code and generates machine code in a single pass. It is efficient in terms of memory usage but may not perform extensive optimizations.

-

Multi-Pass Compiler: A multi-pass compiler goes through the source code multiple times, each pass performing a specific analysis or transformation. This approach allows for more advanced optimizations and error checking.

-

Front-End Compiler: The front-end compiler is responsible for the initial phases of compilation, such as lexical and syntax analysis. It generates an intermediate representation of the code.

-

Back-End Compiler: The back-end compiler takes the intermediate representation from the front-end and performs code optimization and code generation to produce the final machine code.

-

Just-In-Time (JIT) Compiler: JIT compilers are used in languages like Java and .NET. They compile code at runtime, translating it into machine code just before execution. This can lead to performance improvements.

-

Cross Compiler: A cross compiler is designed to generate machine code for a different architecture or platform than the one on which it runs. This is useful for developing software for embedded systems or different hardware architectures.

-

Bootstrapping Compiler: A bootstrapping compiler is a compiler that is used to compile itself. It starts with a minimal version of the compiler and gradually evolves into a more feature-rich compiler.

-

High-Level Language Compiler: These compilers translate high-level programming languages like C, C++, or Java into machine code or assembly language.

-

Low-Level Language Compiler: These compilers are used to translate low-level languages like assembly language into machine code.

The choice of compiler type depends on factors such as the programming language, the target platform, and the desired level of optimization.

Analysis of the Source Program

Analysis of the source program is a critical phase in the compilation process. It involves multiple steps, each with its own purpose:

-

Lexical Analysis: Lexical analysis is the first step in which the source code is broken down into tokens. Tokens are the smallest units of code that have meaning, such as keywords, identifiers, literals, and operators.

-

Syntax Analysis: Syntax analysis follows lexical analysis and is concerned with the grammatical structure of the code. It ensures that the code follows the rules of the programming language's grammar. If the code violates the grammar rules, syntax errors are reported.

-

Semantic Analysis: Semantic analysis checks the code for semantic errors. It enforces type checking rules, checks for variable declaration and usage, and ensures that the code adheres to the language's semantics. Semantic errors can include type mismatches or undefined variables.

-

Intermediate Code Generation: In some compilers, an intermediate representation of the code is generated after analysis. This intermediate code is closer to machine code but more human-readable than the final machine code. It serves as a bridge between the source code and the target machine code.

-

Optimization: Optimization is an optional phase where the compiler analyzes the intermediate code to improve the efficiency of the generated code. It can involve eliminating redundancy, simplifying expressions, and rearranging code for better performance.

-

Code Generation: The final step is code generation, where the compiler produces the machine code or assembly code that can be executed by the computer's CPU.

The analysis phase ensures that the source code is processed, checked for correctness, and prepared for code generation.

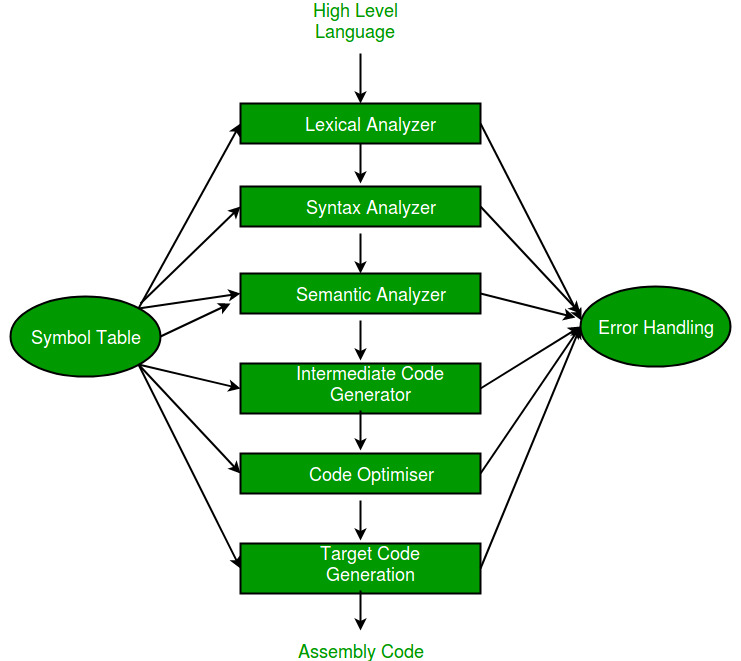

The Phases of a Compiler

A compiler typically operates in multiple phases, each with a specific function. The phases of a compiler can be broadly categorized into two parts: the front-end and the back-end.

Front-End Phases:

-

Lexical Analysis: The source code is broken down into tokens, and irrelevant characters such as whitespace and comments are discarded.

-

Syntax Analysis: The grammar of the programming language is checked to ensure that the code is structurally correct. A parse tree or abstract syntax tree is generated.

-

Semantic Analysis: The meaning of the code is analyzed to ensure it adheres to the language's semantics. This phase includes type checking and variable resolution.

-

Intermediate Code Generation: In some compilers, an intermediate representation of the code is generated. This intermediate code is a bridge between the source code and the target code and is more amenable to optimization.

Back-End Phases:

-

Optimization: The intermediate code is analyzed and transformed to improve the efficiency of the generated code. Optimization can include constant folding, loop unrolling, and many other techniques.

-

Code Generation: The final machine code or assembly code is generated. This code is specific to the target hardware or architecture.

Common Interactions:

These phases often interact with each other. For example, syntax analysis provides the necessary information for the generation of parse trees, which are used in semantic analysis. Optimization can benefit from information gathered during both syntax and semantic analysis.

Grouping of Phases

The phases of a compiler are typically grouped into two main components: the front-end and the back-end.

Front-End:

The front-end of a compiler is responsible for the initial phases of compilation, including lexical analysis, syntax analysis, and semantic analysis. It focuses on understanding the structure and meaning of the source code. The main goal of the front-end is to produce an intermediate representation of the code, which can be easier to work with and is more amenable to optimization.

Back-End:

The back-end of a compiler deals with optimization and code generation. It takes the intermediate representation generated by the front-end and produces the final machine code or assembly code that can be executed on the target platform. The back-end is highly architecture-dependent and needs to be tailored to the specific hardware or instruction set.

The front-end and back-end are often separated to allow for portability. By keeping the front-end consistent and adapting the back-end for different target architectures, compilers can be used to generate code for various platforms.

Cousins of the Compiler

In addition to compilers, there are several related tools and concepts that play important roles in the software development process. Some of the cousins of the compiler include:

-

Interpreter: An interpreter is a tool that directly executes source code without generating a separate machine code file. It reads and executes code line by line. Interpreters are commonly used for scripting languages and in some educational environments.

-

Assembler: An assembler is a tool that translates assembly language code into machine code. It is often used for programming low-level operations, especially for specific hardware platforms.

-

Loader and Linker: These tools are responsible for loading and linking multiple object files and libraries to create an executable program. They are important in the context of multi-file projects and large software systems.

-

Preprocessor: The preprocessor is responsible for processing code before it is passed to the compiler. It performs tasks such as including header files, performing macro substitutions, and conditional compilation.

-

Just-In-Time (JIT) Compiler: A JIT compiler is used in some programming environments, such as Java and .NET. It compiles code at runtime, translating it into machine code just before execution. This can lead to performance improvements.

-

Cross Compiler: A cross compiler is designed to generate machine code for a different architecture or platform than the one on which it runs. This is useful for developing software for embedded systems or different hardware architectures.

-

Bootstrapping Compiler: A bootstrapping compiler is a compiler that is used to compile itself. It starts with a minimal version of the compiler and gradually evolves into a more feature-rich compiler.

These tools and concepts are integral to the software development process and help developers create, test, and run their programs on various platforms and architectures.

Design of Lexical Analysis

Lexical Analysis is the initial phase of a compiler that focuses on breaking down the source code into smaller units called tokens. The design of lexical analysis involves the following key considerations:

-

Token Recognition: In this phase, the lexical analyzer identifies different types of tokens in the source code, such as keywords, identifiers, literals (numbers and strings), and operators.

-

Regular Expressions: Lexical analyzers often use regular expressions to define patterns for recognizing tokens. Each token type has a corresponding regular expression that describes its structure.

-

Lexical Rules: The design of the lexical analyzer includes defining lexical rules for the programming language. These rules specify how to handle whitespace, comments, and special characters.

-

Symbol Tables: The lexical analyzer may create symbol tables to keep track of identifiers and their associated information, such as data types and memory addresses.

-

Error Handling: Lexical analyzers need to handle errors gracefully. When they encounter invalid or unexpected input, they should report errors and recover from them if possible.

-

Performance Optimization: Efficient lexical analyzers are crucial for speeding up the compilation process. Techniques like automata-based lexers and buffer management are used to improve performance.

-

Integration with Syntax Analysis: The output of the lexical analyzer (the stream of tokens) is typically passed to the syntax analyzer, which uses it to build a parse tree or abstract syntax tree. The design should ensure compatibility between the two phases.

The design of lexical analysis is language-specific, as each programming language has its own set of rules and keywords that need to be recognized.

Compiler Writing Tools

Compiler development can be a complex and challenging task, but various tools and frameworks are available to simplify the process. These tools assist in different phases of compiler construction. Here are some common compiler writing tools:

-

Lexical Analyzer Generators: Tools like Lex and Flex generate lexical analyzers based on regular expressions and token definitions. They automate the creation of the lexical analysis phase.

-

Parser Generators: Tools like Yacc and Bison assist in generating parsers for the syntax analysis phase. They take grammar rules as input and produce code for constructing parse trees or abstract syntax trees.

-

Abstract Syntax Tree (AST) Generators: These tools help build and manipulate abstract syntax trees. ANTLR (ANother Tool for Language Recognition) is a widely used tool for creating parsers and ASTs.

-

Code Generation Frameworks: Some compilers use code generation frameworks to generate machine code or assembly code. LLVM (Low-Level Virtual Machine) is an example of a framework used for this purpose.

-

Intermediate Code Generators: Tools like IR (Intermediate Representation) generators assist in creating intermediate code representations of the source code. These representations are closer to machine code and can be used for further optimizations.

-

Optimization Tools: Various optimization frameworks and libraries are available for improving the efficiency of the generated code. Examples include the GCC (GNU Compiler Collection) optimizer and LLVM's optimization passes.

-

Cross-Compilation Tools: For developing software for different architectures or platforms, cross-compilation tools are essential. They enable the creation of code for target systems that may be different from the development system.

-

Integrated Development Environments (IDEs): Many modern IDEs include built-in compiler support, making it easier for developers to write, compile, and debug code seamlessly.

The choice of tools depends on the programming language, the target platform, and the level of control and customization required in the compiler construction process.

Cross Compiler - Bootstrapping

Cross Compiler:

A cross compiler is a type of compiler that generates code for a different target architecture or platform than the one on which it runs. Cross compilers are commonly used in embedded systems development, where the development environment may be distinct from the target hardware. Key points about cross compilers include:

-

Development-Target Mismatch: Cross compilers are used when there is a mismatch between the development environment (where the software is created) and the target environment (where the software will run). This mismatch may involve differences in hardware architecture, operating system, or system constraints.

-

Embedded Systems: Cross compilers are prevalent in embedded systems development, as embedded devices often have custom or specialized hardware with limited resources. Developing software directly on these devices can be impractical.

-

Platform Independence: Cross compilers allow developers to write software on one platform (e.g., a desktop computer) and compile it for a different platform (e.g., an embedded system).

-

Efficiency: Cross compilers can generate optimized code for the target platform, taking advantage of specific hardware features. This can result in more efficient and faster-running software on the target device.

-

Examples: Some popular cross compiler tools include the GNU Compiler Collection (GCC), which supports cross compilation for a wide range of architectures, and tools like ARM GCC for ARM-based embedded systems.

Bootstrapping:

Bootstrapping, in the context of compilers, refers to the process of using a minimalistic version of the compiler to compile a more feature-rich version of itself. The term "bootstrapping" is derived from the idea of "pulling oneself up by one's bootstraps." Key points about bootstrapping include:

-

Self-Compiling: In bootstrapping, the initial compiler, often referred to as the "bootstrap compiler," is used to compile a more advanced version of the compiler. This advanced version can have additional features and optimizations.

-

Iterative Process: Bootstrapping is typically an iterative process. The bootstrap compiler compiles a more capable version of the compiler, which can, in turn, compile an even more advanced version. This process can be repeated as needed.

-

Improvement: The goal of bootstrapping is to gradually improve the compiler's capabilities. With each iteration, the compiler becomes more feature-rich, efficient, and capable of handling a broader range of programming language constructs.

-

Quality Assurance: Bootstrapping is a form of quality assurance for compilers. It ensures that the compiler is self-consistent and capable of compiling its own source code without external dependencies.

-

Maintainability: Bootstrapping makes the compiler more maintainable because changes to the compiler can be tested by recompiling itself. It also ensures that the compiler can adapt to new language standards or features.